Best Hadoop Interview Questions and Answers for Experienced Professionals

Hadoop Interview Questions and Answers

Completing a Hadoop course is only the beginning of your career path in the field. Once you have the skills needed for the job, you will be in a position to apply for relevant roles. Nevertheless, regardless of how well you know Hadoop, performing well in the interview is equally important.

One way of doing so is knowing what to expect and what the panellists will expect from you. That’s why this article is a must-read. It discusses the questions you should expect during a Hadoop interview and the best answers in each case. So, without further ado, let’s dive into some of the most commonly asked Hadoop interview questions and answers.

What is Hadoop?

- Hadoop is an open-source framework built to handle Big Data. It combines various services and tools for storing, managing, processing and analyzing large data sets across clusters of machines. That explains why many businesses have settled on it, especially when processing huge amounts of data. Its efficiency has helped many businesses make better-informed decisions.

Name some examples of companies using Hadoop

Some of the companies that can share their success story with Hadoop include:

- Uber

- The Bank of Scotland

- Amazon

- Netflix

- Adobe

- Yahoo

- Rubikloud

- Spotify

- Hulu

- eBay

- The United States National Security Agency (NSA)

Name the various vendor-specific distributions of Hadoop

Hadoop’s vendor-specific distributions include:

- Hortonworks

- IBM InfoSphere BigInsights

- Microsoft Azure HDInsight

- Amazon EMR

- MapR

- Cloudera

Explain the three main components of Hadoop

Hadoop comprises the following three core components:

- Hadoop Distributed File System (HDFS): This is Hadoop’s storage layer. It is designed to store a relatively small number of very large files rather than many small files.

- YARN: This is the framework responsible for resource management and job scheduling in the cluster. It also provides the execution environment for processing workloads such as batch jobs.

- MapReduce: This is a framework that processes large data sets in parallel across the nodes of a Hadoop cluster.
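The way MapReduce splits work can be illustrated with a word count. The following is a minimal sketch in plain Java that imitates the map, shuffle and reduce phases inside a single process. It does not use the Hadoop API, and the names `WordCountSketch`, `map`, `shuffle`, `reduce` and `run` are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java imitation of the map -> shuffle -> reduce flow.
// All names here are hypothetical; none of them are Hadoop classes.
class WordCountSketch {

    // Map phase: emit a (word, 1) pair for every word in one input line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(Map.entry(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle phase: group all emitted values by key, sorted by key.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: collapse the values of one key into a single count.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    // Runs the three phases in order over all input lines.
    static Map<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(pairs).entrySet()) {
            counts.put(group.getKey(), reduce(group.getValue()));
        }
        return counts;
    }

    public static void main(String[] args) {
        // prints {big=2, clusters=1, data=2, nodes=1}
        System.out.println(run(List.of("big data big clusters", "data nodes")));
    }
}
```

In a real cluster the map and reduce calls run on different nodes and the shuffle moves data over the network; the sketch only shows the order of the phases and the shape of the data between them.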

Tell us about the YARN Components

There are four major components of YARN, summarized below:

- Container: It is a bundle of resources on a single node, such as CPU, RAM, disk and network.

- Node Manager: It is responsible for executing tasks on a single data node. It runs as a daemon on each slave node.

- Resource Manager: It controls the allocation of resources across the cluster and runs as the master daemon.

- Application Master: It works hand in hand with the Node Manager, monitoring how the tasks of an application are executed. It manages the lifecycle of a user’s job as well as the resources that the application demands during execution.

Hadoop can run in three different modes. Tell us more about them

- Standalone mode uses a single Java process and the local file system to run Hadoop services.

- Pseudo-distributed mode runs all Hadoop services on a single-node Hadoop deployment.

- Fully-distributed mode runs the Hadoop master and slave services on separate nodes.

What are the various configuration files in Hadoop?

- hadoop-env.sh

- core-site.xml

- hdfs-site.xml

- mapred-site.xml

- yarn-site.xml

- masters and slaves

What is Big Data?

- As the name suggests, big data refers to a collection of large and complex data sets. Because of the sheer volume, it becomes difficult to process the data using conventional data processing applications or relational database management tools. Besides processing, it is also difficult to capture, curate, store, search, share, transfer, analyze and visualize Big Data. That said, big data can be quite helpful when it comes to decision making.

Explain the 5 Vs that characterize Big Data

- Volume: This refers to the overall amount of data

- Velocity: It represents the rate at which data is generated and grows

- Variety: It refers to the various types of data, such as structured, semi-structured, and unstructured data. Data is also collected in various formats, including CSV, audio and video, to mention a few.

- Veracity: It refers to the accuracy and trustworthiness of the data.

- Value: At the end of the day, the data needs to be processed or analyzed. It has value only if the results of that processing are good enough to support decision making.

Which Java Versions are required when running Hadoop?

- It depends on the Hadoop release. Older releases ran on Java 1.6.x, Hadoop 2.7 and later 2.x releases require Java 7 or higher, and Hadoop 3.x requires Java 8 or higher.

Which operating systems support Hadoop?

They include:

- Linux

- Windows

- Solaris

- Mac OS X

- BSD

Do you know how Hadoop differs from the traditional RDBMS?

Yes, and here are some of the factors that differentiate the pair.

- Datatypes: Hadoop can process structured, semi-structured and unstructured data, whereas an RDBMS mainly processes structured data.

- Suitability: Hadoop is most suitable for processing unstructured data, storing big data and data discovery. On the other hand, an RDBMS is best for OLTP workloads and complex ACID transactions.

- Schema: Hadoop applies the schema when data is read (schema on read), whereas an RDBMS enforces the schema when data is written (schema on write)

- Speed: In the same vein, writes are fast in Hadoop, whereas reads are fast in an RDBMS

Name the common Hadoop input formats

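- Text input format: It is Hadoop’s default input format. Every line of a plain text file is a record, with the position of the line in the file as the key and the content of the line as the value

- Key value input format: Made to read plain text files that are broken down into lines, where each line is split into a key and a value at a separator character (a tab by default)

- Sequence file input format: Designed to read sequence files, Hadoop’s binary file format for storing key-value pairs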
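The difference between the first two formats comes down to how a line of text becomes a key-value record. The sketch below imitates that in plain Java without the Hadoop API; `InputFormatSketch`, `asTextRecords` and `asKeyValueRecord` are hypothetical names. It assumes single-byte characters and `\n` line endings, so the character offset stands in for the byte offset.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Plain-Java imitation of how two text input formats shape their records.
// The class and method names are hypothetical, not part of Hadoop.
class InputFormatSketch {

    // Text input format style: key = offset where the line starts, value = the whole line.
    static List<Map.Entry<Long, String>> asTextRecords(String fileContent) {
        List<Map.Entry<Long, String>> records = new ArrayList<>();
        long offset = 0;
        for (String line : fileContent.split("\n")) {
            records.add(Map.entry(offset, line));
            offset += line.length() + 1; // +1 for the newline; assumes one byte per character
        }
        return records;
    }

    // Key value input format style: split the line at the first tab.
    // A line without a tab becomes the key, with an empty value.
    static Map.Entry<String, String> asKeyValueRecord(String line) {
        int tab = line.indexOf('\t');
        if (tab < 0) {
            return Map.entry(line, "");
        }
        return Map.entry(line.substring(0, tab), line.substring(tab + 1));
    }

    public static void main(String[] args) {
        String content = "user1\tlogin\nuser2\tlogout";
        System.out.println(asTextRecords(content));
        for (String line : content.split("\n")) {
            System.out.println(asKeyValueRecord(line));
        }
    }
}
```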

List the three main steps necessary during the deployment of a Big Data solution

- Data ingestion

- Data storage

- Data Processing

What are the different sources of data during data ingestion?

- Social media feeds

- Images

- Documents

- Flat files

- Log files

- RDBMS such as Oracle and MySQL

- CRM, including Salesforce and Siebel

- SAP or any other Enterprise Resource Planning System

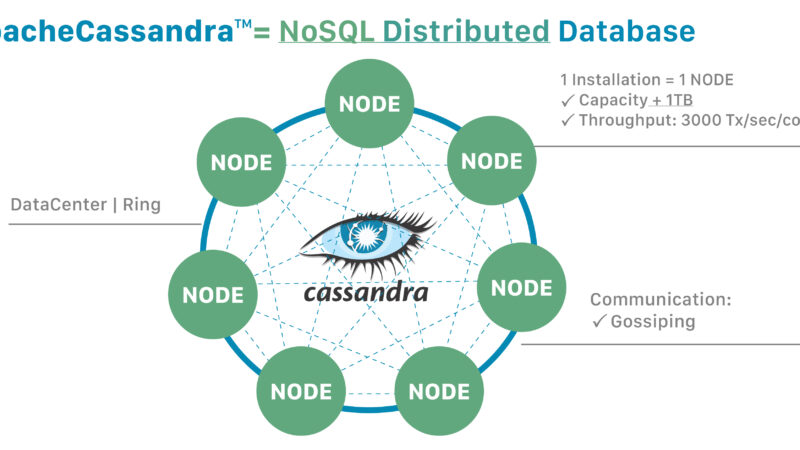

What are the types of storage in Hadoop?

There are two, namely:

- HDFS: It facilitates sequential access to data

- NoSQL: It is ideal for random access to data, and an excellent example is HBase

Which processing frameworks can be used during data processing?

- Hive

- Pig

- Spark

- MapReduce

Name the various file formats supported by Hadoop

- Parquet file

- AVRO

- Sequence files

- Columnar

- JSON

- CSV

What are the methods of executing a Pig Script?

There are three, namely:

- Script file

- Embedded script

- Grunt shell

Name the various components of a Hive query processor

- Operators

- Parser

- Execution engine

- Type checking

- Logical plan generation

- Physical plan generation

- Optimizer

- Semantic analyzer

- User-defined functions

Differentiate between HBase and a relational database

The two differ in various ways, including the following:

- HBase has no fixed schema, which is not the case with a relational database

- A relational database is row-oriented, whereas HBase is column-oriented

- HBase supports automatic partitioning, whereas a relational database has no built-in support for it

- HBase tables are sparsely populated, whereas relational databases have thin tables

Tell us about the three core methods of any Reducer

The core methods are:

- setup(): The method configures different parameters, including the distributed cache and the input data size. The framework calls it once at the start of a task.

protected void setup(Context context)

- cleanup(): Its role is to clean up temporary files. The framework calls it once at the end of a task.

protected void cleanup(Context context)

- reduce(): As the name suggests, this is the heart of a reducer. The framework calls it once for every key, passing in the values associated with that key.

protected void reduce(KEYIN key, Iterable<VALUEIN> values, Context context)
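The order in which these three methods fire can be shown with a small stand-in. The sketch below is plain Java and deliberately does not extend Hadoop’s Reducer class; `SumReducerSketch`, its `log` field and its simplified method signatures (no context object) are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-in for a reducer that sums the values of each key.
// It only mimics the call order: setup once, reduce per key, cleanup once.
class SumReducerSketch {
    final List<String> log = new ArrayList<>();          // records the order of lifecycle calls
    final Map<String, Integer> output = new TreeMap<>(); // collects one result per key

    void setup() {
        log.add("setup");
    }

    void reduce(String key, Iterable<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        output.put(key, sum);
        log.add("reduce:" + key);
    }

    void cleanup() {
        log.add("cleanup");
    }

    // Drives the lifecycle over input that is already grouped by key.
    void run(Map<String, List<Integer>> groupedInput) {
        setup();
        for (Map.Entry<String, List<Integer>> group : groupedInput.entrySet()) {
            reduce(group.getKey(), group.getValue());
        }
        cleanup();
    }

    public static void main(String[] args) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        grouped.put("a", List.of(1, 2));
        grouped.put("b", List.of(5));
        SumReducerSketch reducer = new SumReducerSketch();
        reducer.run(grouped);
        System.out.println(reducer.log);    // prints [setup, reduce:a, reduce:b, cleanup]
        System.out.println(reducer.output); // prints {a=3, b=5}
    }
}
```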

What is the importance of a JobTracker?

As the name says, the JobTracker is involved with tracking jobs and the resources they use. Its roles include:

- Managing resources

- Monitoring the availability of various resources

- Managing the life cycle of tasks, including their progress and fault tolerance

- Tracking the execution of MapReduce workloads

- Monitoring the TaskTrackers and reporting the overall job status back to the client

- Finding the TaskTracker node that is best placed to execute a certain task

- Communicating with the NameNode to locate data

Explain the components of the Hive Architecture

There are four, as follows:

- Execution Engine: It processes the query and bridges the gap between Hive and Hadoop. Its communication with the metastore is bidirectional, which lets it carry out different tasks.

- Metastore: It stores metadata and passes it to the compiler during query execution.

- User Interface: It calls the execute interface of the driver, which creates a session for the query.

- Compiler: It creates the execution plan for a query, which can consist of MapReduce jobs, metadata operations and operations on HDFS.

Tell us about the main components of a Pig Execution Environment

These components include:

- Execution Engine

- Compiler

- Optimizer

- Parser

- Pig Scripts

What are the major components of HBase?

The main components of HBase include:

- ZooKeeper: It provides a distributed service for managing and coordinating server state in a cluster. It also monitors the availability of servers and sends notifications in case of a server failure.

- HMaster: It is responsible for assigning regions to region servers and for load balancing. Clients also go through it for schema changes and other metadata operations. Last but not least, it monitors the region servers in the cluster.

- Region Server: HBase tables are divided horizontally into regions according to their key values, and each region server hosts a set of these regions. Every region server works as a worker node and handles different client requests, including read, write, update and delete.

Name the primary components of the architecture of ZooKeeper

There are four, namely:

- Server applications: They provide the interface that client applications use to interact with the server

- Client applications: They facilitate the interaction between client-side applications and the distributed application

- Node: It is any system that is part of the cluster

- ZNode: It is also a node, but it differs from the rest in that its role is to store data together with its versions and updates

Tell us about some uses of Hadoop

Some of the various uses of Hadoop are:

- Stream processing

- Processing the neuronal signals of a rat’s brain on a Hadoop computing cluster

- Capturing and analyzing social media, video, transaction and clickstream data on various platforms for ad targeting

- To improve business performance through real-time analysis of customer data

- Accessing unstructured data, including output from financial data, clinical data, medical correspondence, imaging reports, lab results, doctor’s notes and medical devices

- Used in scientific research, cyber security, defence and intelligence, among other public sectors

- Ideal for social media platforms during the management of videos, images, posts and content

- Fraud prevention and detection

- Managing street traffic

- Archiving emails and managing content

What are the major challenges of Big Data that Hadoop Solves?

They include:

- Storage, due to the huge volume of data

- Security, which is important especially when handling enterprise data

- Analysis and the derivation of insights, which are crucial if a company wants to benefit from big data

That’s where Hadoop comes in by making the difficult tasks as simple as possible.

What makes Hadoop an ideal solution for anyone dealing with Big Data?

The following features make Hadoop suitable for handling big data

- High storage capacity

- Cost-effectiveness

- Scalability

- Reliability

- Security

What are the challenges associated with the usage of Hadoop?

Hadoop is a lifesaver when dealing with big data, but it has its limitations, including the following:

- Its management often proves difficult due to the complexity of its applications

- Its performance leaves a lot to be desired for low-latency workloads, because MapReduce is built for batch processing rather than for processing streamed data

- Too many small files will overload the NameNode, because it keeps the metadata of every file and block in memory.

Do you know the NameNode’s port number?

Yes. The NameNode web UI listens on port 50070 by default in Hadoop 2.x and on port 9870 in Hadoop 3.x.

Name some data types used in MapReduce that are Hadoop-specific

They include:

- DoubleWritable

- BooleanWritable

- ObjectWritable

- FloatWritable

- IntWritable
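What these types have in common is that they know how to serialize themselves to a binary stream and read themselves back. The sketch below imitates that contract with the standard `java.io` classes only. `IntBoxSketch` is a hypothetical class, not Hadoop’s IntWritable; its `write` and `readFields` methods merely follow the shape of the methods Hadoop’s Writable interface declares.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical class imitating the write/readFields contract of Hadoop's Writable types.
class IntBoxSketch {
    private int value;

    IntBoxSketch() {
    }

    IntBoxSketch(int value) {
        this.value = value;
    }

    int get() {
        return value;
    }

    // Serialize the wrapped int as 4 bytes.
    void write(DataOutput out) throws IOException {
        out.writeInt(value);
    }

    // Overwrite the wrapped int with the next 4 bytes of the stream.
    void readFields(DataInput in) throws IOException {
        value = in.readInt();
    }

    // Helper: serialize a box into a byte array.
    static byte[] toBytes(IntBoxSketch box) {
        try {
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            box.write(new DataOutputStream(buffer));
            return buffer.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Helper: rebuild a box from a byte array.
    static IntBoxSketch fromBytes(byte[] bytes) {
        try {
            IntBoxSketch box = new IntBoxSketch();
            box.readFields(new DataInputStream(new ByteArrayInputStream(bytes)));
            return box;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] bytes = toBytes(new IntBoxSketch(42));
        System.out.println(bytes.length);           // prints 4
        System.out.println(fromBytes(bytes).get()); // prints 42
    }
}
```

A compact, reusable binary form like this is what lets keys and values be moved cheaply between map and reduce tasks.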

Tell us the three ways of connecting an application when running Hive as a server.

They are:

- Thrift client

- JDBC driver

- ODBC driver

Do you know the role of a JDBC driver in a Sqoop setup?

It serves as a connector between Sqoop and various RDBMS.

Which is the default storage location of table data in Hive?

- /user/hive/warehouse

Can you tell us about deletion tombstone markers?

In HBase, deleted data is not removed right away. It is flagged with tombstone markers instead, which can be categorized into 3 types as follows:

- Column delete marker: It marks every version of a column for deletion

- Version delete marker: It is similar to the column delete marker but only marks a particular version of a column for deletion.

- Family delete marker: It marks every column of a column family for deletion, regardless of its version.
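The scope of the three marker types can be compared with a toy model. The following sketch is plain Java and does not use the HBase client API; `TombstoneSketch`, `Cell`, `Marker` and `visible` are hypothetical names. It also simplifies the rules to exactly what is listed above and leaves out the timestamp comparisons a real store performs.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of delete markers hiding stored cell versions.
// All names are hypothetical; this is not the HBase API.
class TombstoneSketch {
    enum MarkerType { VERSION, COLUMN, FAMILY }

    // One stored version of one column in one column family.
    static final class Cell {
        final String family;
        final String column;
        final long version;

        Cell(String family, String column, long version) {
            this.family = family;
            this.column = column;
            this.version = version;
        }
    }

    static final class Marker {
        final MarkerType type;
        final String family;
        final String column; // unused (null) for FAMILY markers
        final long version;  // only used by VERSION markers

        private Marker(MarkerType type, String family, String column, long version) {
            this.type = type;
            this.family = family;
            this.column = column;
            this.version = version;
        }

        static Marker version(String family, String column, long version) {
            return new Marker(MarkerType.VERSION, family, column, version);
        }

        static Marker column(String family, String column) {
            return new Marker(MarkerType.COLUMN, family, column, 0);
        }

        static Marker family(String family) {
            return new Marker(MarkerType.FAMILY, family, null, 0);
        }
    }

    // True when the marker covers the given cell.
    static boolean hides(Marker marker, Cell cell) {
        if (!cell.family.equals(marker.family)) {
            return false;
        }
        switch (marker.type) {
            case FAMILY:
                return true; // every column and version in the family
            case COLUMN:
                return cell.column.equals(marker.column); // every version of one column
            case VERSION:
                return cell.column.equals(marker.column) && cell.version == marker.version;
            default:
                return false;
        }
    }

    // Returns the cells that no marker covers.
    static List<Cell> visible(List<Cell> cells, List<Marker> markers) {
        List<Cell> result = new ArrayList<>();
        for (Cell cell : cells) {
            boolean hidden = false;
            for (Marker marker : markers) {
                if (hides(marker, cell)) {
                    hidden = true;
                    break;
                }
            }
            if (!hidden) {
                result.add(cell);
            }
        }
        return result;
    }
}
```

With four cells, three of them in an `info` family, a version marker on one cell leaves three visible, a column marker on a two-version column leaves two, and a family marker on `info` leaves one.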